Logic versus Computer Code: Why pure functional programming is the future

There and back again

Back in high school, sitting bored in class and programming text-based games on my TI-83, I realized I wanted to make something better. For me, better meant better graphics and faster execution. I wanted to make exciting action games. The first thing I did was take my existing BASIC knowledge and try to output graphics as text, using small, almost pixel-like characters.

I ran into some obvious problems. It was slow and looked funny. I knew what I wanted the computer to do; it was just doing it poorly. I had to take the reins myself and tell the CPU what to do. So I began my adventure into Z80 assembly. It was tough. I found myself printing out code on paper so I could work on it during class, looking for new ways to save a few CPU cycles in my routines. I eventually wrote better, faster games and learned more about CPUs. It gave me a low-level foundation I’ve used my entire career, and even today I’m aware there is a certain respect for this knowledge. I was pretty happy about it and thought I was a great programmer. I was completely wrong.

My only ‘released’ TI-83 game in all its black and white glory.

After a while I started writing more complex programs and realized how difficult it was to write them in assembly. After all, I didn’t want to write a division routine every time I moved to a new system. When I started writing in C++, I began to realize how valuable the compiler was. It took a ‘high level’ operation like “a / b” and converted it to efficient machine code for whatever system it was targeting. I really appreciated things like “for” loops, “if” statements and even function calls. The language gave me a better way to write my logic and let the computer take care of the hard work of figuring out how to execute it efficiently.

Of course, the compiler wasn’t perfect, and as I started my career I found places where people dropped back into assembly to tell the computer exactly what to do. I remember engineers who would insist on manually inlining functions because earlier compiler versions had failed to do it. In the video game industry, there was also a certain pride in optimally managing memory pools, and little respect for programmers who used memory-managed languages and didn’t have to deal with this problem. I fell into this trap again and felt that the hardest problem I had to solve was telling the computer how to execute my code in an optimal way.

At the same time I was hitting bugs like I never had before. In my student projects I had some hard bugs, but it wasn’t a big deal. Bugs weren’t the end of the world.

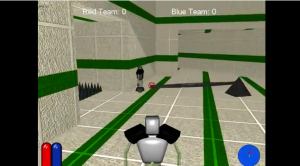

“Hey, the attack only works 50% of the time.” “Huh, that’s 50% more than I thought it would. Ship it!” Equanimity © 2010 DigiPen (USA)

They didn’t cost thousands or millions of dollars. No one was laid off due to crashes. In the real world, I found myself working around the clock fixing critical issues. It was about more than just my own job. Bugs and software failures had a massive impact on all the co-workers I had become close to. I almost burned out a few times (maybe I actually did) and I started to think there had to be a better way. I looked at testing. I thought, “Maybe if I tested everything perfectly, I would never run into a bug again”.

I looked at different applications and testing strategies and tried to apply them in the area I had a passion for, video games. I became frustrated. There were too many branches, too many mutating states, too many external systems. I could achieve “100% code coverage” and still miss tons of critical bugs. It seemed like insanity.

Finally, after a conversation with a friend, I found a beacon of light: functional programming. Other developers had run into this problem too and had made languages that solved it. There was no more mutating memory and no more side effects. Finally, a system I could test. I looked at these languages and thought about writing games in them. That was when I realized that, while this would work, it would be incredibly slow to execute. I was back where I had been when I started my journey.

Two Steps

Looking back, I missed the bigger picture in high school. The class in which I spent the most time coding was my math class. I remember thinking, “Well, this stuff is easy and simple. Why should I listen or look more into this when there are more practical and harder problems in my assembly code?”. What I missed is that there are two parts to coding. One is the logic of what you want to do; the other is translating that logic into a language the computer understands. When I was writing the assembly games, I knew logically what I wanted to do. I could write it out in BASIC like I had before. What was hard wasn’t the logic, it was telling the computer how to optimally execute my logic. That’s true for anything simple, but complex logic requires different tools.

The Language of Logic

Logic problems were being solved long before computers. For thousands of years we’ve been developing a system for reasoning about these problems, called mathematics. We may have some new systems to add to the mathematical language, but a large portion of it is very relevant to writing complex systems.

Many programming patterns and algorithms have a strong mathematical basis. Any time you hit a hard problem, chances are someone already solved it years ago using math.

Too often though, we get caught up in telling the CPU what to do. We forget to look at making sure what we’re trying to do makes sense.

The Translator

The process of taking the logic and changing it to machine code is called compiling.

The reason programming languages can’t fully separate themselves from writing bits and bytes is that compilers are not quite ready. C and C++ are a great example of this. The developer shouldn’t have to think about whether or not the compiler will inline a function. We’re getting better, but the reality is we’re not there yet.

The Future

If you look at most programming languages, they operate on both of these concerns. They provide ways for you to write what you logically want to do and to tell the computer how to do it. This can be a big problem. Take the pseudo-code:

    int x0 = 0;
    x0 = update(x0); // adds 1
    move(x0, y);

and

    int x0 = 0;
    int x1 = update(x0); // adds 1
    move(x1, y);

Logically, they both have the same result. The first one just tells the computer, “Hey, you can go ahead and put this result back at this address, because I’m done using that object now”, while the second one says, “I might use x0 later, let’s not delete it yet”. Given the rest of that function, the compiler should be able to determine whether x0 is used again and decide for itself to reuse the memory as an optimization.
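To make the comparison concrete, here is a minimal C++ sketch of the two forms. The pseudo-code never defines `update` or `move`, so the versions here are assumptions: `update` just adds 1, and `move_to` (renamed to avoid confusion with `std::move`) simply returns the position it was given.

```cpp
#include <utility>

// Hypothetical stand-in for update(): adds 1 and has no side effects.
int update(int x) { return x + 1; }

// Hypothetical stand-in for move(): the original leaves it undefined, so
// this version only reports the position it was asked to move to.
std::pair<int, int> move_to(int x, int y) { return {x, y}; }

// First form: the updated value overwrites the same variable.
std::pair<int, int> reuse_variable(int y) {
    int x0 = 0;
    x0 = update(x0);
    return move_to(x0, y);
}

// Second form: the updated value gets a new name and x0 is left untouched.
std::pair<int, int> new_binding(int y) {
    const int x0 = 0;
    const int x1 = update(x0);
    return move_to(x1, y);
}
```

Both functions return the same pair for any `y`. The only difference is the storage hint in the first one, which is the kind of decision an optimizing compiler is generally in a position to make on its own.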

Functional languages have started to become popular lately and there is a good reason for that. As we hit harder problems and break assumptions made about execution order, describing logically what we are doing has become more important. Functional languages are great at this as they stick to logic and provide ways of describing that logic.
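The same idea can be sketched even in C++. In this rough illustration, the `Player` type and both damage functions are made up for the example. The first function mutates shared state, so its result depends on everything that ran before it. The second only describes the logic: given this state and this damage, here is the new state.

```cpp
// Hypothetical game state, invented for this illustration.
struct Player {
    int health;
};

// Shared mutable state: what a call does depends on whatever happened
// to this variable earlier, so a test has to set it up first.
Player g_player{100};

void apply_damage_in_place(int amount) {
    g_player.health -= amount;
    if (g_player.health < 0) g_player.health = 0;
}

// Pure version: the result depends only on the arguments and the input
// is left untouched, so the same call always gives the same answer.
Player apply_damage(Player before, int amount) {
    const int remaining = before.health - amount;
    return Player{remaining < 0 ? 0 : remaining};
}
```

Calling `apply_damage_in_place(30)` twice leaves a different health value each time, while `apply_damage(p, 30)` can be checked by looking at nothing more than its input and its output.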

Sadly, we still have quite a bit of work to do on the compiler side before functional languages can compete with low-level languages. That being said, I’ve found it useful to prototype even systems with high performance requirements in functional languages, to make sure my logic is sound before moving to other languages. In fact, I think everyone should learn a functional language. It’s a bit easier than going back to your math books and trying to piece together how category theory helps you write a web server.

It will be an interesting change, but maybe in 10-20 years the idea of doing large amounts of coding in languages that mix logic and computer code will seem as crazy as writing large amounts of assembly code does now.